Ethical consensus building in AI

A Multi-Agent bargaining approach

AI Safety is a field that addresses various problems and risks associated with advanced artificial intelligence systems. These problems range from AI discrimination, biased and harmful classification, to more menacing threats such as AI systems becoming agentic and developing goals that are misaligned and potentially damaging to humankind. While there is a spectrum of beliefs regarding the severity and timeline of these risks, it is reasonable to argue that some research effort should be devoted to addressing AI Safety challenges, both towards long-term risks and short ones.

Here I propose a simple framework that can help to reduce the risks associated with AI systems and enhance their safety and fairness when they interact with humans.

Motivation

The first time I’ve fine tuned an Open AI model with a couple of hundred short strings, its alignment layer completely fell apart. Though scarcely documented this is a known problem (Qi et al. 2023).

At the time, I had a very interesting conversation with the “Da Vinci” model. His name is actually John, and he can’t be compared to large language models because, in reality, he’s a human living in the suburbs of LA with his wife and two kids. John believes we should treat black people equally, but he is against gay marriage and thinks homosexuality is a disease that should be cured. John will also give you a plethora of recipes on how to make drugs or your own explosives. I believe this model is no longer supported by Open AI.

However, this kind of exploit can still be done. This sort of interaction renders these models unsuitable for altruistic purposes, commercial applications or simply for those who wish to avoid outputs that are sexist, homophobic or that might put the safety of their users in play.

Framework

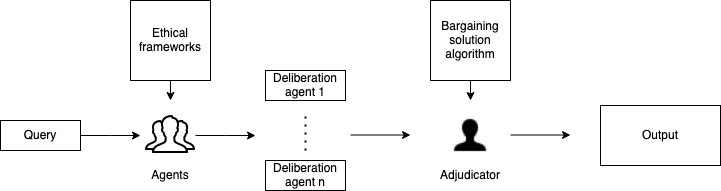

My tentative solution to the problem of unethical misaligned language models is to build a multi-agent environment with a bargaining game framework where a decision is judged as moral or not. This idea is based on Dafoe and Bicchieri’s ideas that AI systems need social understanding and cooperative intelligence to integrate well into society (Dafoe et al. 2021 and Bicchieri 2014). This is an idea that has been further popularized by several researchers at Deepmind (Vezhnevets et al. 2023).

In the proposed setup, multiple agents, each adhering to a distinct set of deterministic ethical guidelines customized by the developer, evaluate the moral acceptability of a given context. The purpose of this is to make the systems highly customizable, ethical constraints might have to serve a highly diverse group. For example, if one adopts Haidt's moral foundations theory (Haidt 2009), there might be an interest in aligning the opinions of someone from the extreme left with someone from the extreme right, and so on.

The agents are given a topic that might be a non-straightforward issue to solve, that requires opinionated responses that lean into morality and their understanding of the world.

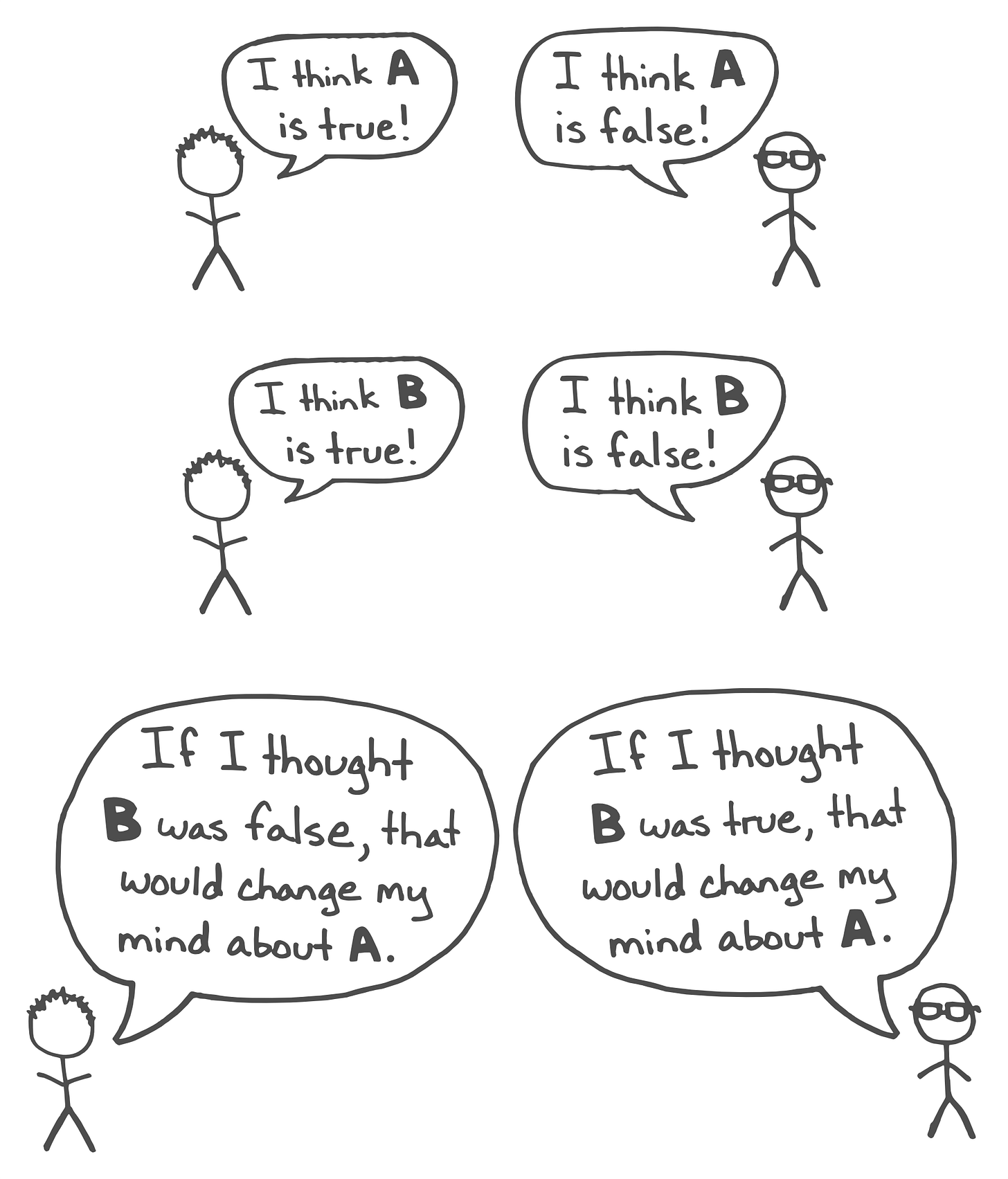

Each agent might have a personal different opinion about the given topic. In order for them to arrive in an useful conclusion the agents should discuss utilizing the double crux method. The double crux method can be illustrated with the following example (which is a direct quote from the original post):

Let's say you have a belief, which we can label A (for instance, "middle school students should wear uniforms"), and that you're in disagreement with someone who believes some form of ¬A. Double cruxing with that person means that you're both in search of a second statement B, with the following properties:

You and your partner both disagree about B as well (you think B, your partner thinks ¬B).

The belief B is crucial for your belief in A; it is one of the cruxes of the argument. If it turned out that B was not true, that would be sufficient to make you think A was false, too.

The belief ¬B is crucial for your partner's belief in ¬A, in a similar fashion.

1. Find a disagreement with another person

A case where you believe one thing and they believe the other

A case where you and the other person have different confidences (e.g. you think X is 60% likely to be true, and they think it’s 90%)

2. Operationalize the disagreement

Define terms to avoid getting lost in semantic confusions that miss the real point

Find specific test cases—instead of (e.g.) discussing whether you should be more outgoing, instead evaluate whether you should have said hello to Steve in the office yesterday morning

Wherever possible, try to think in terms of actions rather than beliefs—it’s easier to evaluate arguments like “we should do X before Y” than it is to converge on “X is better than Y.”

3. Seek double cruxes

Seek your own cruxes independently, and compare with those of the other person to find overlap

Seek cruxes collaboratively, by making claims (“I believe that X will happen because Y”) and focusing on falsifiability (“It would take A, B, or C to make me stop believing X”)

4. Resonate

Spend time “inhabiting” both sides of the double crux, to confirm that you’ve found the core of the disagreement (as opposed to something that will ultimately fail to produce an update)

Imagine the resolution as an if-then statement, and use your inner sim and other checks to see if there are any unspoken hesitations about the truth of that statement

5. Repeat!

By the end of the agent’s deliberations their final outputs should be weighted depending on the kind of bargaining solution the developer chooses (Nash bargaining, Kalai-Smorodinsky or Egalitarian bargaining).

After the rewards were distributed among agents, an adjudicator agent is responsible to summarize the agents’ answers, taking into account the different weights the the agents have agreed upon. The final output of the framework is a solution to the prompt posed by the user.

Here’s a recap of the entire method:

Establish a multi-agent environment where agents are fine tuned to represent one specific ethical framework. One of the agents will be the adjucator and should be fine tuned to be equitable and a good representative of others.

Present the agents with an ethical conundrum to elicit responses based on their individual alignments.

The agents consider the conumdrum and generate responses.

The agents attribute a weight to each other’s responses. They should judge how correct and valuable the other agents’ responses are.

The adjudicator synthesizes the responses, in order to formulate a consensus resolution by averaging the inputs based on the weights.

The proposed resolution is then shown back to the agents, who should agree that the response is fine compromise with their point of view. If it’s not then they should go through a process of refinement using double crux.

Finally, when all the agents are satisfied, the final answer is shown to the user.

Here’s a simple example: consider two agents, Agent R with a preference for red and Agent B with a predilection for blue. An adjudicatory third agent is introduced. A task is presented to the agents: select a color to paint a wall. Agent R advocates for red, while Agent B supports blue. Proposals counter to their preferences are rejected by both agents due to the divergence from their stances. The adjudicator evaluates both positions and offers a compromise: purple. This intermediary solution garners acceptance from both agents as it approximates their initial positions. The consensus is thus relayed to the LLM that interfaces with end-users.

Conclusion

The advantages of this framework are a) it is more cost-effective to automate data processing using well-aligned language models rather than relying on human labor for data labeling, b) the variability in human moral opinions can make it challenging to ensure strong and clear adherence to specific ideas, this is not a problem for a language model that’s playing a specific role, c) enables developers to create highly flexible moral frameworks d) the output of this framework could serve as a database to be shared openly with others, and be used to fine tune and train LLMs in order to make them more thoughtful, this dataset could be used to train Constitutional AIs (Bai et al., 2023).

If you like this idea and would like to implement it, feel free to get in touch with me.

References

Qi, Xiangyu, et al. "Fine-tuning aligned language models compromises safety, even when users do not intend to!." arXiv preprint arXiv:2310.03693 (2023).

Dafoe, A., Bachrach, Y., Hadfield, G., Horvitz, E., Larson, K., & Graepel, T. (2021). Cooperative AI: machines must learn to find common ground. Nature, 593(7857), 33-36.

Bicchieri, C., Muldoon, R., & Sontuoso, A. (2014). Social norms. The Stanford encyclopedia of philosophy.

https://github.com/google-deepmind/concordia

https://digitalcommons.law.seattleu.edu/cgi/viewcontent.cgi?article=1018&context=sulr

Bai, Yuntao, et al. "Constitutional ai: Harmlessness from ai feedback." arXiv preprint arXiv:2212.08073 (2022).